2408212115 A generative workflow review of CRAFT

Clarity, Robustness, Action, Forethought, and TenX

Implementing a {personal generative AI strategy} is now mandatory if we are to manage the several services we subscribe to. The {augmented workflow} is the phrase of the moment, and everyone is trying to work out what the idea actually entails. It has been almost 21 months since ChatGPT was introduced. In the early days the conversation was about use-case policies; with the rise in the number of LLMs, a self-declaration of how knowledge work is produced with them has become essential, and in some cases mandatory.

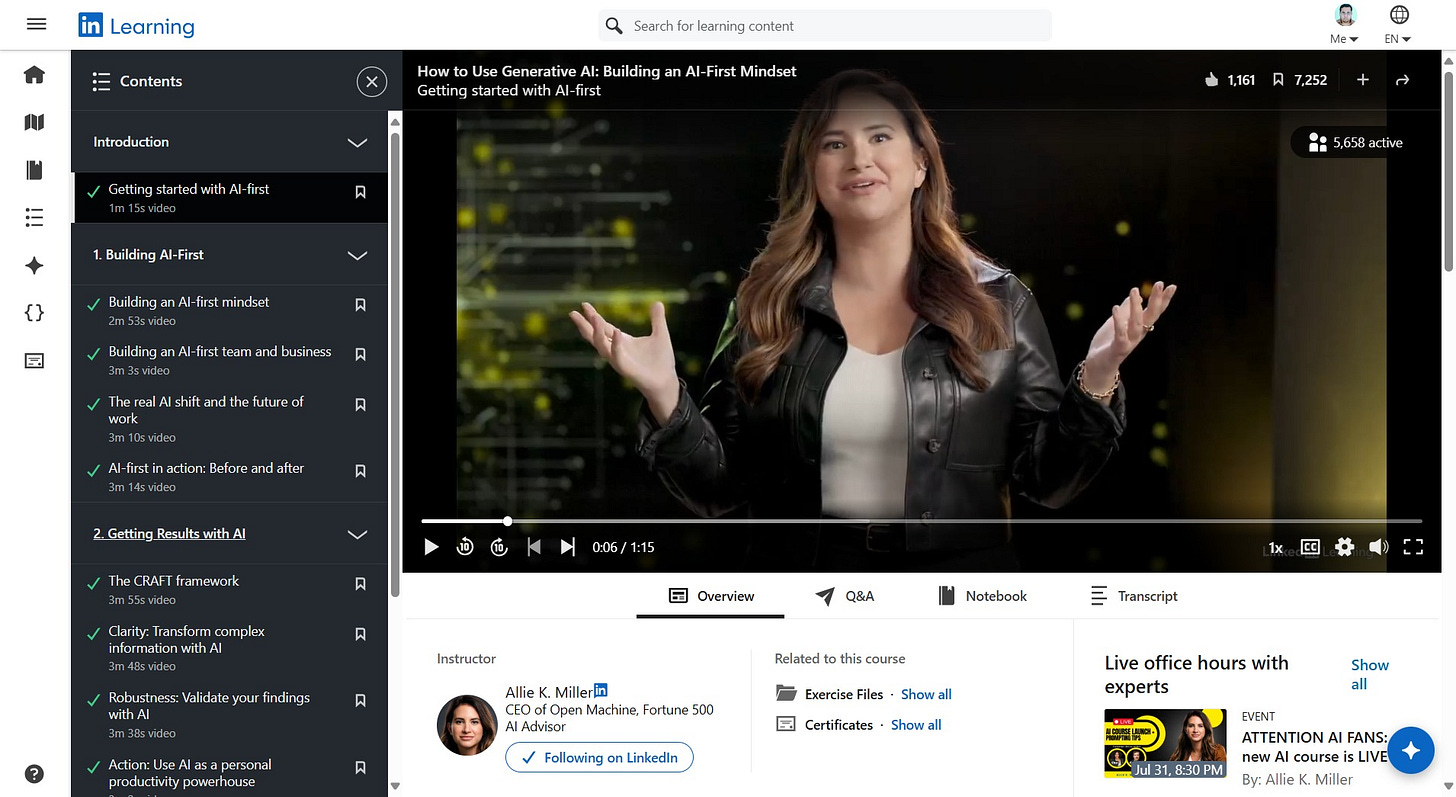

Workflows do not transfer across contexts; they need iteration and customisation before they work well. CRAFT, by Allie K. Miller, is one such case study. Clarity, Robustness, Action, Forethought, and TenX mark out the scope of the framework. The more implementation models like this emerge, the more options there are to test against your own use case. Any productivity gains worth highlighting are relative to context and to the resources you have access to, which makes the choice of framework a subjective one.

CRAFT suits situations with repetitive tasks of similar intensity, where the work is predictable and there are enough resources in place to absorb errors in the output. It is in that context that its five elements need unpacking. In my search for an ideal framework, I have found that a system limited to four to six parts is the easiest to implement and to manage alongside its associated tools. The cost of translating an implementation strategy into practice should also be accounted for.

Free and paid services differ in what they offer, and therefore in what you can do with them. ChatGPT has GPTs and Claude has Projects; both add a layer to the tools that considerably changes what is possible with them. The main difference between the free and paid offerings is the context window, which limits how much material the model can take in and therefore the output it can produce. There is a sticker price attached to the AI mindset. The starter pack is free, but if you need to scale operations, paying for the bots becomes inevitable.

Is CRAFT worth implementing? Maybe. All the services are very good at clarifying ideas. For consistency and robustness checks, the paid tiers of both ChatGPT and Claude do better. The same applies to action planning with LLMs: the more data you feed in, the better the plans. I would not recommend Forethought, because the models keep updating and the results are little more than speculation. Calling brainstorming 10X is misleading, as it can be folded into the clarifying stage. For my use case, CRAFT is at 10% implementation.

Elements …

Clarity: Transform complex information with AI - 20%

Robustness: Validate your findings with AI - 5%

Action: Use AI for personal productivity - 5%

Forethought: Unlock the future with AI - 0%

TenX: 10x your productivity with AI - 0%

Extra.

LLMs are an information service sold as an application, and the kind of material they generate depends on the subscription. Replicating someone else's efficiency protocol exactly is, I would argue, never really possible. A minimum of two bots is essential, mostly because they are everywhere. Ideating in ChatGPT, outlining in Claude, analysing in ChatGPT, and editing in Claude is by far the most practical sequence if both are paid for. Not much has been written about the AI assistants built into Microsoft Office and Google Workspace; they too are worth testing to augment knowledge work.
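The alternating two-bot sequence above can be sketched as a simple routing table. This is a minimal illustration under stated assumptions: the `PIPELINE` list and the helpers `assistant_for` and `handoffs` are hypothetical names, and no ChatGPT or Claude API is called. The sketch only makes explicit which assistant handles each stage and how often the work switches between services.

```python
# Hypothetical sketch: a stage-to-assistant routing table for the
# ideate -> outline -> analyse -> edit sequence. Names are illustrative only;
# nothing here talks to a real ChatGPT or Claude API.

PIPELINE = [
    ("ideate", "ChatGPT"),
    ("outline", "Claude"),
    ("analyse", "ChatGPT"),
    ("edit", "Claude"),
]


def assistant_for(stage: str) -> str:
    """Return the assistant assigned to a workflow stage."""
    for name, assistant in PIPELINE:
        if name == stage:
            return assistant
    raise ValueError(f"unknown stage: {stage!r}")


def handoffs() -> int:
    """Count how many times consecutive stages switch to a different assistant."""
    return sum(
        1
        for (_, current), (_, following) in zip(PIPELINE, PIPELINE[1:])
        if current != following
    )


if __name__ == "__main__":
    for stage, assistant in PIPELINE:
        print(f"{stage:>8} -> {assistant}")
    print("handoffs:", handoffs())
```

Each handoff is a point where material has to be copied from one service's context into the other's, so the count gives a rough sense of the overhead of running two bots side by side.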

Productivity gains are not a given when a workflow is implemented or a service is adopted; they have to be defined, at the organisational or the personal level. Generative AI is a hype-led digital transformation event, and the services offered at the time of writing may not be the same in the future. We have had AI in various forms in many of our applications before, and this natural progression will continue. The lasting lesson from this phenomenon is the skill of optimising workflows for productivity.

References & Links

Philosophy is crucial in the age of AI. As we reach AGI, philosophy can guide responsible AI development [Article]

we can be allowed to propose that, if AI alignment is the serious issue that OpenAI believes it to be, it is not just a technical problem to be solved by engineers or tech companies, but also a social one. That will require input from philosophers, but also social scientists, lawyers, policymakers, citizen users, and others.

PolyGraphs – Combatting Networks of Ignorance in the Misinformation Age [Study]

this interdisciplinary project investigates information sharing in social networks, aiming to understand how ignorance can arise even in groups of rational inquirers.

We have to stop ignoring AI’s hallucination problem. AI might be cool, but it’s also a big fat liar, and we should probably be talking about that more. [Article]

How to Become a Technical Writer – A Guide for Developers [Workflow]

6 ChatGPT Prompts to Enhance your Productivity at Work [Workflow]

A Great Start to the Day: Planning and Prioritization

Effective E-mail Management and Response Crafting

Nailing Executive Presentations at Meetings: Summarizing Key Points and Action Items

Getting a Better Understanding of Complex Information

Turning Brainstorming Ideas into Creatively Generated Content

Polishing your writing

How I Use ChatGPT as a Frontend Developer (5 Ways). With ChatGPT chat links and screenshots and final result [Workflow]

How to Run Llama-3.1🦙 Locally Using Python🐍 and Hugging Face 🤗 [Workflow]

The Great AI Unbundling. Why ChatGPT and Claude will spawn the next wave of startups [Essay]

AI at Wimbledon, ChatGPT for coding, and scaling with AI personas [Interview]

Goldman Sachs Gen AI report, Claude 2.0 Engineer, and RIAA lawsuits [Paper]

What's new from Anthropic and what's next: Alex Albert [Video]

How Packy McCormick Finds His Next Big Idea - Ep. 29 [Podcast]

Level Up Writing: Top 10 AI Rewording Tools. Struggling to rewrite content? AI rewording tools can help! Discover the 10 best & unleash your content's potential. Save time, write fresher & improve clarity. [List]

Lex AI: Full Review [Video]

Here’s how people are actually using AI. Something peculiar and slightly unexpected has happened: people have started forming relationships with AI systems. [Article]

AI language models work by predicting the next likely word in a sentence. They are confident liars and often present falsehoods as facts, make stuff up, or hallucinate. This matters less when making stuff up is kind of the entire point. In June, my colleague Rhiannon Williams wrote about how comedians found AI language models to be useful for generating a first “vomit draft” of their material; they then add their own human ingenuity to make it funny.

But these use cases aren’t necessarily productive in the financial sense. I’m pretty sure smutbots weren’t what investors had in mind when they poured billions of dollars into AI companies, and, combined with the fact we still don't have a killer app for AI, it's no wonder that Wall Street is feeling a lot less bullish about it recently.

The use cases that would be “productive,” and have thus been the most hyped, have seen less success in AI adoption. Hallucination starts to become a problem in some of these use cases, such as code generation, news and online searches, where it matters a lot to get things right. Some of the most embarrassing failures of chatbots have happened when people have started trusting AI chatbots too much, or considered them sources of factual information. Earlier this year, for example, Google’s AI overview feature, which summarizes online search results, suggested that people eat rocks and add glue on pizza.

And that’s the problem with AI hype. It sets our expectations way too high, and leaves us disappointed and disillusioned when the quite literally incredible promises don’t happen. It also tricks us into thinking AI is a technology that is even mature enough to bring about instant changes. In reality, it might be years until we see its true benefit.

We need to prepare for ‘addictive intelligence’. The allure of AI companions is hard to resist. Here’s how innovation in regulation can help protect people. [Article]

How to build a successful AI strategy [Workflow]

An artificial intelligence strategy is simply a plan for integrating AI into an organization so that it aligns with and supports the broader goals of the business. A successful AI strategy should act as a roadmap for this plan. Depending on the organization’s goals, the AI strategy might outline the steps to effectively use AI to extract deeper insights from data, enhance efficiency, build a better supply chain or ecosystem and/or improve talent and customer experiences.

A well-formulated AI strategy should also help guide tech infrastructure, ensuring the business is equipped with the hardware, software and other resources needed for effective AI implementation. And since technology evolves so rapidly, the strategy should allow the organization to adapt to new technologies and shifts in the industry. Ethical considerations such as bias, transparency and regulatory concerns should also be addressed to support responsible deployment.

As artificial intelligence continues to impact almost every industry, a well-crafted AI strategy is imperative. It can help organizations unlock their potential, gain a competitive advantage and achieve sustainable success in the ever-changing digital era.

Steps for building a successful AI strategy

Explore the technology

Assess and discover

Define clear objectives

Identify potential partners and vendors

Build a roadmap

Data

Algorithms

Infrastructure

Talent and outsourcing

Common roadblocks to building a successful AI strategy

Insufficient data

Lack of AI knowledge

Misalignment of strategy

Scarcity of talent

A guide to artificial intelligence in the enterprise [Workflow]

Developing Your AI Strategy: 12-Step Framework for Enterprise Leaders. Learn to design, test and deploy enterprise-scale AI systems that drive real revenue. [Workflow]

From Prompt Engineering to Agent Engineering. Introducing a practical agent-engineering framework [Workflow]

A generative AI reality check for enterprises [Workflow]

Take the time and locate the expertise to make good architectural choices

Small language models make the most sense for practical, detailed decision making

Your generative AI system is stupid because your data is stupid

Try to gain strategic advantage this time with a transformed data architecture, instead of kicking the can down the road again

Brace yourself for more security challenges, given more tech debt and complexity

Barriers to AI adoption. 7 obstacles to adopting GenAI tools in engineering organizations. [Workflow]

Taking a GenAI Project to Production [Workflow]

Power Hungry Processing: Watts Driving the Cost of AI Deployment? [Paper]

On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜 [Paper]

The Steep Cost of Capture [Paper]

NVIDIA at the Center of the Generative AI Ecosystem – For Now [Paper]

Atlas of AI. Power, Politics, and the Planetary Costs of Artificial Intelligence [Book]

Artificial Intelligence Is Stupid and Causal Reasoning Will Not Fix It [Paper]

ChatGPT is bullshit [Paper]

AI Art is Theft: Labour, Extraction, and Exploitation: Or, On the Dangers of Stochastic Pollocks [Paper]

The Dark Side of Dataset Scaling: Evaluating Racial Classification in Multimodal Models [Paper]

Into the LAION’s Den: Investigating Hate in Multimodal Datasets [Paper]

Nearly a third of GenAI projects to be dropped after PoC. At least 30% of generative AI (GenAI) projects will be dropped after the proof-of-concept (PoC) stage by the end of 2025, due to poor data quality, inadequate risk controls, escalating costs or unclear business value, according to Gartner. [News]

AI Snake Oil: What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference [Book]

Frolicsome Engines: The Long Prehistory of Artificial Intelligence [Essay]

Why artificial intelligence needs sociology of knowledge: parts I and II [Paper]

Whence Automation? The History (and Possible Futures) of a Concept [Paper]

Public Computing Intellectuals in the Age of AI Crisis [Paper]

The belief that AI technology is on the cusp of causing a generalized social crisis became a popular one in 2023. While there was no doubt an element of hype and exaggeration to some of these accounts, they do reflect the fact that there are troubling ramifications to this technology stack. This conjunction of shared concerns about social, political, and personal futures presaged by current developments in artificial intelligence presents the academic discipline of computing with a renewed opportunity for self-examination and reconfiguration. This position paper endeavors to do so in four sections. The first explores what is at stake for computing in the narrative of an AI crisis. The second articulates possible educational responses to this crisis and advocates for a broader analytic focus on power relations. The third section presents a novel characterization of academic computing's field of practice, one which includes not only the discipline's usual instrumental forms of practice but reflexive practice as well. This reflexive dimension integrates both the critical and public functions of the discipline as equal intellectual partners and a necessary component of any contemporary academic field. The final section will advocate for a conceptual archetype--the Public Computer Intellectual and its less conspicuous but still essential cousin, the (Almost) Public Computer Intellectual--as a way of practically imagining the expanded possibilities of academic practice in our discipline, one that provides both self-critique and an outward-facing orientation towards the public good. It will argue that the computer education research community can play a vital role in this regard. Recommendations for pedagogical change within computing to develop more reflexive capabilities are also provided.

Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI [Paper]

The AI hype machine [Podcast]

Journalism as an Institution in the Age of AI [Presentation]

37C3 - Self-cannibalizing AI [Presentation]

Toward AI Realism. How do we talk about generative AI productively? People tend to feel a way about it, conversations run on vibes, and no one seems to know very much. Critics point out that models run on non-consensually harvested data, reproduce biases, and threaten to corrode our collective history, that scanners routinely fail to identify Black faces, bullies use deepnudes to harass women and gender non-conforming people, artists are being put out of work, education has been upended. And for what? [Essay]